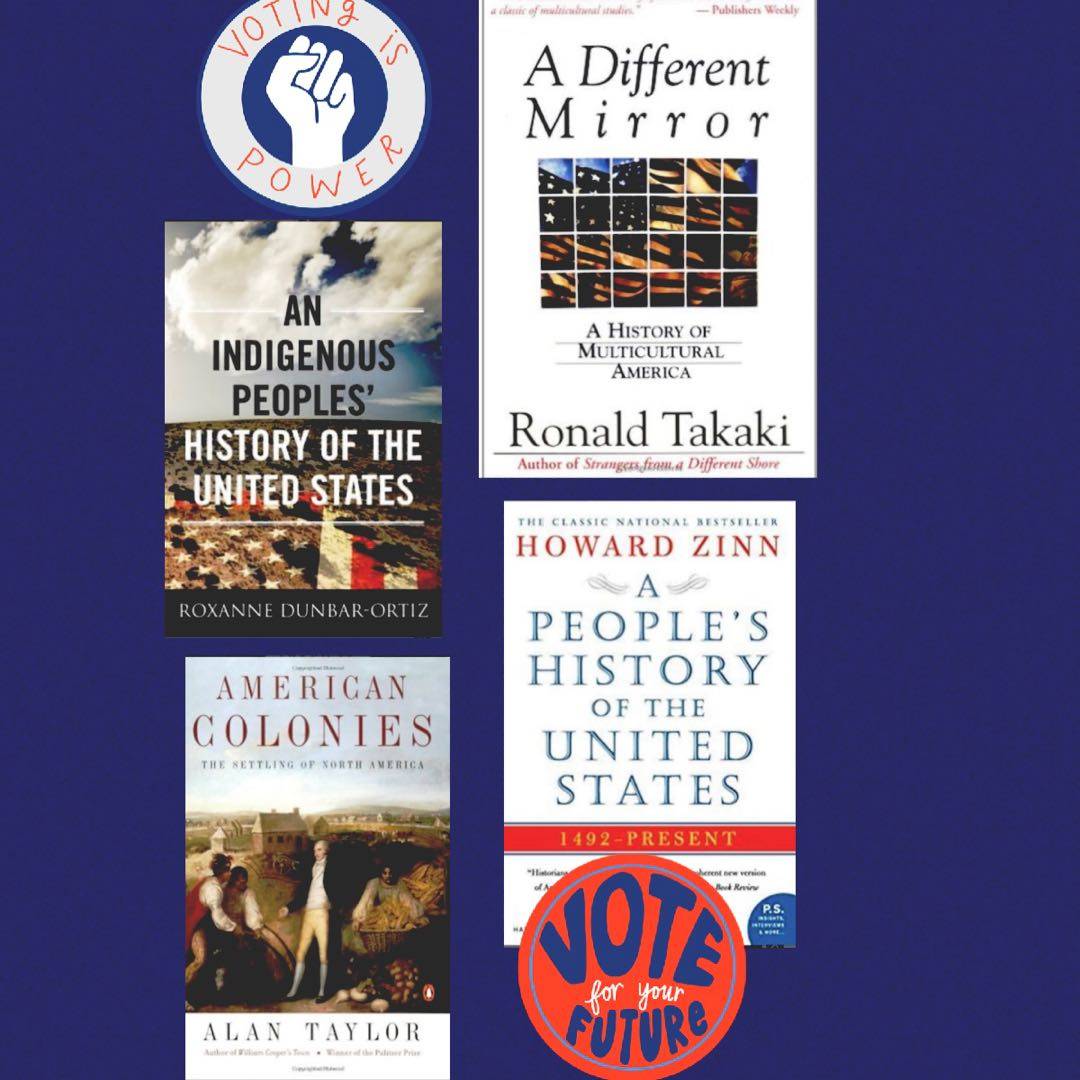

I read American Colonies with my teens for homeschool. I cannot recommend it highly enough for anyone interested in the history of marginalization that was foundational to the beginning of this country. The others are still on my TBR, but they represent the education I still need that books will provide, and the reason why I believe no human should be illegal. Please share any books that have inspired you to learn more about the cultures that make up US.